I was wondering why ConcurrentHashmaps did not support null. A quick look at the code showed, the put method in ConcurrentHashMap throws an explicit NullPointerException after a null check on value.

Listing 1

1. public V put(K key, V value) {

2. if (value == null)

3. throw new NullPointerException();

4. int hash = hash(key.hashCode());

5. return segmentFor(hash).put(key, hash, value, false);

6. }

Well probably that meant, the passed value could be accessed inside the ConcurrentHashMap without expecting it to be null at any point, during the code flow through the ConcurrentHashMap. I checked the usage of passed in value in ConcurrentHashMap. There was no evidence which would show a possibility of NullPointerException occuring, and hence I was tempted to intrigue more.

So I tweeted on twitter 'Why can't Concurrent Hash Maps take null values ?', hoping that someone will reply back with an answer. I got none till date.

A quick google search did not give me any reliable answers and hence I decided to check it out myself. I created a new class ConcurrentHashMap in my test project (using eclipse), under the package, java.util.concurrent. I commented out the null check in put (removed line 2 and 3 in Listing 1). I wrote a small test program (Multi-Threaded) to do some reads and writes, and ran the program. (I ran the program pre pending my custom java.util.concurrent.ConcurrentHashMap class into the bootstrap class loader, using vm argument -Xbootclasspath/p:). Surprisingly I did not get any errors and everything was fine.

I decided to check the performance at this point.

I wrote a sample program with two threads in an infinite loop. One thread was reading (Listing 2) and the other one was writing (Listing 3) onto the same ConcurrentHashMap.

Listing 2. Reader

1. int count = 0;

2. Date startDate = new Date();

3. while (true) {

4. ++count;

5. chm.get("test");

6. if (count % 1000000 == 0) {

7. Date endDate = new Date();

8. System.out.println("Get : " + (endDate.getTime() - startDate.getTime()));

9. startDate = endDate;

10. }

11. }

Listing 3. Writer

1. count = 0;

2. startDate = new Date();

3. while (true) {

4. ++count;

5. chm.put("test", "null");

6. if (count % 1000000 == 0) {

7. Date endDate = new Date();

8. System.out.println("Put : " + (endDate.getTime() - startDate.getTime()));

9. startDate = endDate;

10. }

11. }

This test program was run under two scenarios :

case 1 : With the modified ConcurrentHashMap (with null check on value removed) and Line 5 in Listing 3 being chm.put("test", "null");

case 2 : With the unmodified ConcurrentHashMap (in rt.jar) and Line 5 in Listing 3 changed to chm.put("test", "test");

The numbers are the time taken in millis for 1 million iteratons of each loop shown in Listing 2 and 3.

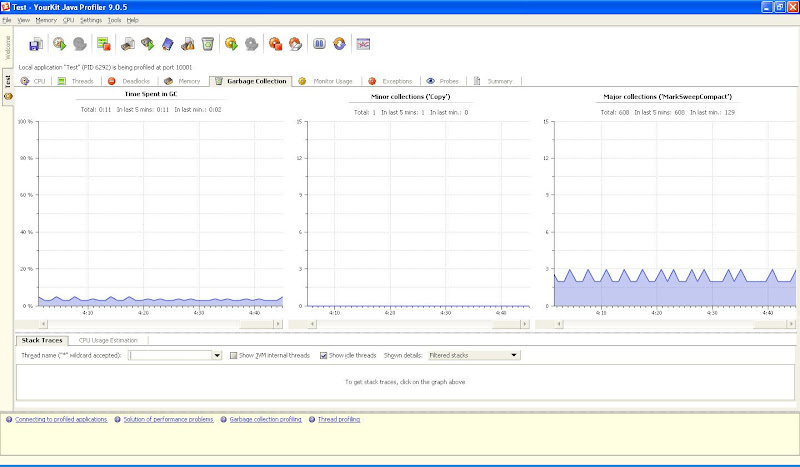

Case 2. Case 1. Get : 47 Put : 313 Get : 62 Get : 344 Put : 140 Put : 297 Get : 47 Get : 328 Get : 47 Put : 312 Get : 62 Get : 328 Put : 125 Put : 281 Get : 47 Get : 313 Get : 47 Put : 313 Put : 125 Get : 343 Get : 47 Put : 312 Get : 47 Get : 344 Get : 47 Put : 313 Put : 125 Get : 219 Get : 47 Get : 125 Get : 46 Put : 468 Get : 47 Get : 265 Put : 125 Put : 282 Get : 47 Get : 344 Get : 47 Put : 281 Put : 125 Get : 344 Get : 47 Put : 266 Get : 47 Get : 359 Get : 47 Put : 281 Put : 125 Get : 297 Get : 47 Put : 297 Get : 46 Get : 328 Get : 47 Put : 297 Put : 125 Put : 281 Get : 32 Get : 328 Get : 46 Put : 281 Put : 110 Get : 329 Get : 47 Put : 297 Get : 47 Get : 312 Get : 47 Put : 281 Put : 140 Get : 328 Get : 47 Put : 297 Get : 47 Get : 328 Get : 47 Put : 313

Results were interesting and it clearly showed that in worse case, gets would be 5 to 10 times slower with a ConcurrentHashMap supporting Null than the original one. The puts were 3 to 5 times slower. Hence its a no go for ConcurrentHashMap to support null values in its current state.

Reasoning :

The method get in Segment implementation of a ConcurrentHashMap takes a lock on the segment itself, if the value of a HashEntry turns out to be null. This is illustrated in Listing 4.

Listing 4.

1. V get(Object key, int hash) {

2. if (count != 0) { // read-volatile

3. HashEntry e = getFirst(hash);

4. while (e != null) {

5. if (e.hash == hash && key.equals(e.key)) {

6. V v = e.value;

7. if (v != null)

8. return v;

9. return readValueUnderLock(e); // recheck

10. }

11. e = e.next;

12. }

13. }

14. return null;

15. }

Now if ConcurrentHashMap does not allows null values then how can the HashEntry have a null value (value is marked volatile in HashEntry). Consider a scenario while a thread may be trying to put a new value on the HashEntry (line 22 in Listing 5) in the put method of the ConcurrentHashMap. The HashEntry object is created but not yet initialized, so that value attribute in HashEntry does not reflects its actual value, but instead reflects null. At this point a reader gets the HashEntry and reads a null for attribute value in HashEntry, thus having a need to recheck with a lock (line 9. Listing 4.).Listing 5.

1. V put(K key, int hash, V value, boolean onlyIfAbsent) {

2. lock();

3. try {

4. int c = count;

5. if (c++ > threshold) // ensure capacity

6. rehash();

7. HashEntry[] tab = table;

8. int index = hash & (tab.length - 1);

9. HashEntry first = tab[index];

10. HashEntry e = first;

11. while (e != null && (e.hash != hash || !key.equals(e.key)))

12. e = e.next;

13. V oldValue;

14. if (e != null) {

15. oldValue = e.value;

16. if (!onlyIfAbsent)

17. e.value = value;

18. } else {

19. oldValue = null;

20. ++modCount;

21. tab[index] = new HashEntry(key, hash, first, value);

22. count = c; // write-volatile

23. }

24. return oldValue;

25. } finally {

26. unlock();

27. }

28. }

This extra check in Line 9 of Listing 4 is very costly (as we already see it in the test results above) and is avoided if a not null value of HashEntry is encountered. In case the null values are allowed this would require to have this lock acquired each time a null value is accessed, hence making the ConcurrentHashMap slower.